My Projects

Here are some of the projects I've worked on. Each one represents different skills and technologies I've mastered. Some are technically heavy, such as the CERN data project; others are light and fun, like my card game creator. Code can solve lots of problems!

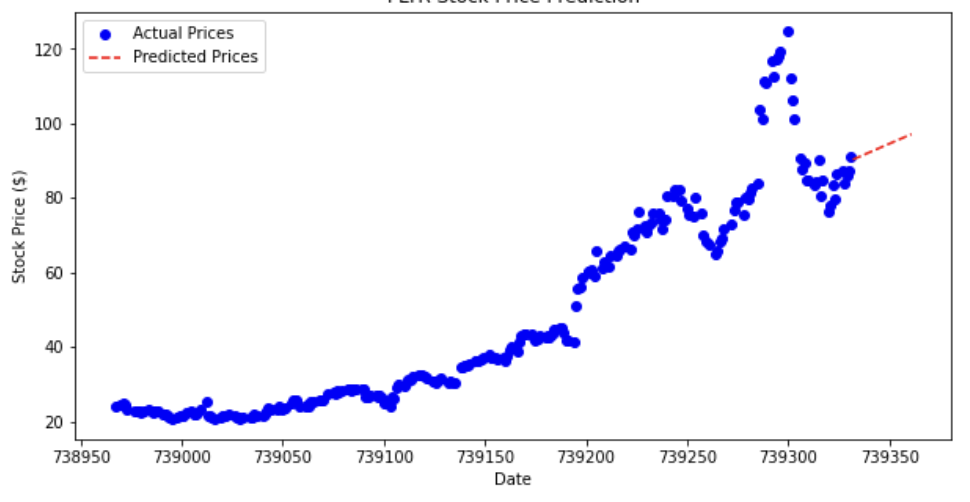

I want to get into data analysis in the financial markets. There are a lot of rich patterns to analyze and...

I used to live in DC, so I wrote code that automatically gets me a satellite picture of the current...

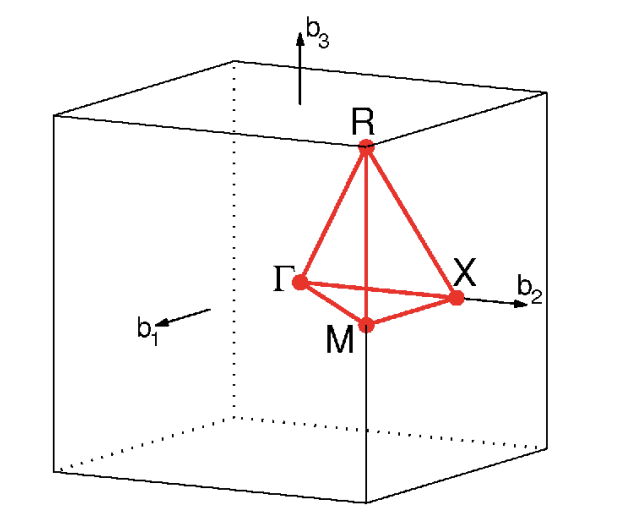

Electronic structure theory analysis using Quantum ESPRESSO on a supercomputer. The calculations were run from a Bash script with parallelized computations, and the analysis and plotting were done in Python.

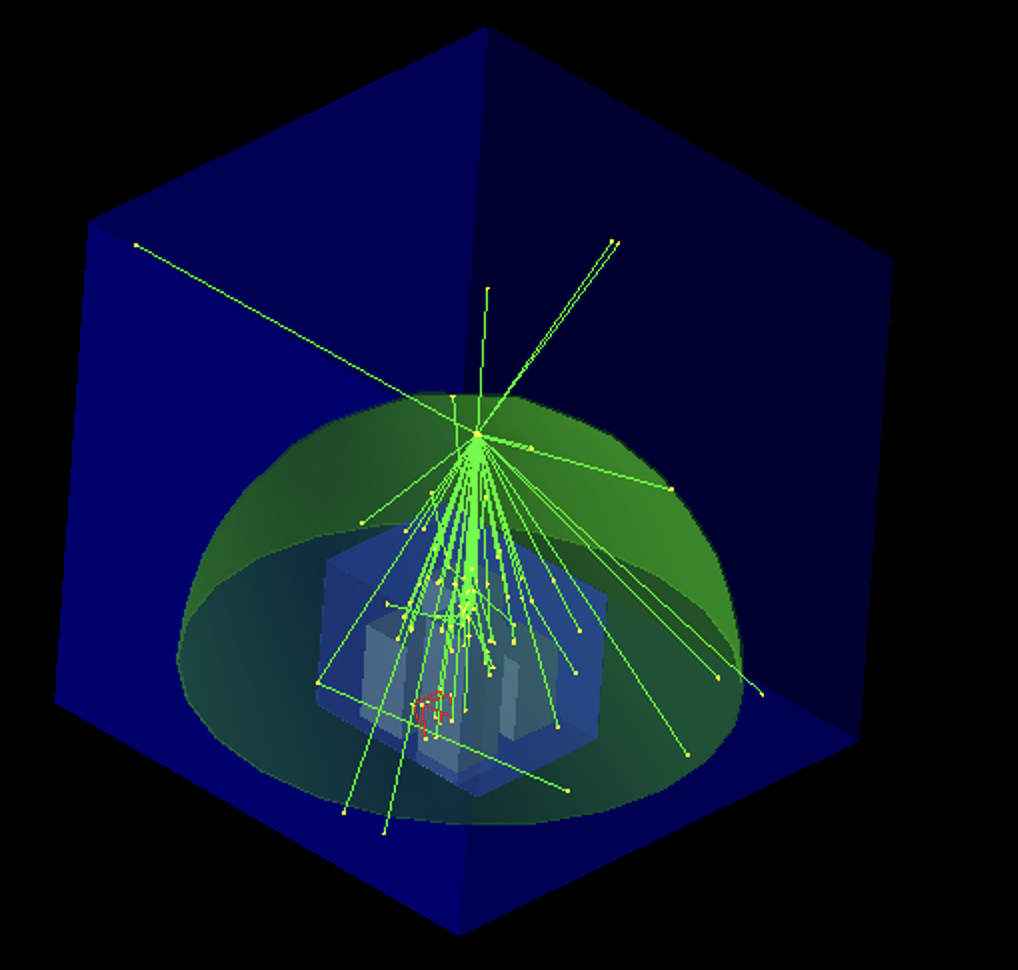

The CERN_ThomasLanning folder has all my work in it. It is a C++ simulation of particle collider experiments and a...

The Analysis.ipynb notebook is where the story starts. You will probably want to read it through to understand my detector structure, since it is a bit unconventional...

There are two data folders:

- data_energy_range: data for the not-improved analysis.
- Improved_data: data for the improved analysis. This folder also contains some pictures that the Jupyter notebook uses, so they need to stay there.

There is also some data in the main folder; it is just exploratory data I use at the start of my notebook.

Finally, the folder Project_improved contains my improved version of the simulation, and the folder Project_not_improved contains my initial version. I edited the source code of both after compiling them last, but only to add comments, so the code may recompile when you rerun it.

Enjoy :)

This code implements a hybrid model to play Hangman using both Reinforcement Learning (RL) and a GPT-based language...

The HangmanEnv class sets up the game environment, taking a word and initializing lives, guessed letters, and a word state with underscores for unguessed letters.

The 'step()' function updates the game state based on the guessed letter, reducing lives if the guess is incorrect or updating the word state if the letter is in the word.
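For illustration, here is a minimal sketch of an environment that behaves as described above. It is not the project's own class: the six-life default, the +1/-1 rewards, the handling of repeated guesses, and the won()/done() helpers are all my assumptions.

```python
class HangmanEnv:
    """Minimal Hangman environment (illustrative sketch, not the project's class)."""

    def __init__(self, word: str, lives: int = 6):
        self.word = word.lower()
        self.lives = lives                   # assumed default of six lives
        self.guessed = set()                 # letters tried so far
        self.state = ["_"] * len(self.word)  # one underscore per hidden letter

    def step(self, letter: str):
        """Apply one guess and return (word_state, reward, done)."""
        letter = letter.lower()
        if letter in self.guessed:
            # Repeated guess: nothing changes, but it earns a penalty (assumption)
            return "".join(self.state), -1, self.done()
        self.guessed.add(letter)
        if letter in self.word:
            # Reveal every position holding the guessed letter
            for i, ch in enumerate(self.word):
                if ch == letter:
                    self.state[i] = letter
            reward = 1
        else:
            self.lives -= 1  # wrong guess costs a life
            reward = -1
        return "".join(self.state), reward, self.done()

    def won(self) -> bool:
        return "_" not in self.state

    def done(self) -> bool:
        return self.won() or self.lives <= 0
```

For example, `HangmanEnv("apple").step("p")` returns `("_pp__", 1, False)`. Returning a `(state, reward, done)` tuple follows the usual RL environment convention, which makes it easy to feed transitions into a DQN replay buffer.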

The DQN model predicts the next letter based on the current game state, processing input through four layers with dropout and layer normalization.
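To make the layer stack concrete, here is a dependency-free sketch of a four-layer Q-network forward pass with layer normalization and dropout. Everything specific here is an assumption rather than the project's design: the state encoding (one-hot letters padded to a hypothetical MAX_LEN of 12, plus a guessed-letter mask), the layer sizes, the dropout rate, and the LayerNorm, then ReLU, then dropout ordering. A real implementation would normally use a framework such as PyTorch, with learnable LayerNorm scale and shift.

```python
import math
import random

ALPHABET = "abcdefghijklmnopqrstuvwxyz"
MAX_LEN = 12  # hypothetical: longest word the encoder supports


def encode_state(state: str, guessed: set) -> list:
    """One-hot each position over a-z plus '_', pad to MAX_LEN, append a guessed-letter mask."""
    vec = []
    for i in range(MAX_LEN):
        onehot = [0.0] * 27
        if i < len(state):
            onehot[26 if state[i] == "_" else ALPHABET.index(state[i])] = 1.0
        vec.extend(onehot)  # padded positions stay all zeros
    vec.extend(1.0 if c in guessed else 0.0 for c in ALPHABET)
    return vec


def linear(x, w, b):
    return [sum(wi * xi for wi, xi in zip(row, x)) + bj for row, bj in zip(w, b)]


def layer_norm(x, eps=1e-5):
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def dropout(x, p, training):
    if not training or p == 0.0:
        return x  # inference: dropout is the identity
    return [0.0 if random.random() < p else v / (1 - p) for v in x]


def relu(x):
    return [max(0.0, v) for v in x]


class TinyDQN:
    """Four linear layers; hidden layers use LayerNorm, ReLU and dropout."""

    def __init__(self, sizes=(350, 128, 128, 64, 26), p=0.2, seed=0):
        rng = random.Random(seed)
        self.layers = []
        for n_in, n_out in zip(sizes, sizes[1:]):
            bound = 1 / math.sqrt(n_in)
            w = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
            self.layers.append((w, [0.0] * n_out))
        self.p = p

    def forward(self, x, training=False):
        for i, (w, b) in enumerate(self.layers):
            x = linear(x, w, b)
            if i < len(self.layers) - 1:  # no norm/activation on the output layer
                x = dropout(relu(layer_norm(x)), self.p, training)
        return x  # one Q-value per letter a-z

    def best_letter(self, state: str, guessed: set) -> str:
        q = self.forward(encode_state(state, guessed))
        # Pick the highest-valued letter that has not been guessed yet
        return max((q[i], c) for i, c in enumerate(ALPHABET) if c not in guessed)[1]
```

Masking already-guessed letters at selection time, rather than relying on the network to learn that, is a common design choice for this kind of action space.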

The HangmanLLM class uses a GPT-2 architecture to predict the next letter from a partially completed word. If GPT cannot predict a letter, it falls back to the RL model.
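The fallback rule can be sketched independently of GPT-2 itself. Below, the two predictors are hypothetical callables standing in for the GPT-based and RL models; what counts as "cannot predict" (no answer, not a single letter, or a letter already guessed) is my assumption.

```python
import string
from typing import Callable, Optional, Set

# (word_state, guessed_letters) -> letter, or None if no prediction
Predictor = Callable[[str, Set[str]], Optional[str]]


def predict_with_fallback(state: str, guessed: Set[str],
                          llm_predict: Predictor, rl_predict: Predictor) -> str:
    """Ask the language model first; fall back to the RL model if its answer is unusable."""
    letter = llm_predict(state, guessed)
    if letter is not None:
        letter = letter.strip().lower()
    usable = (
        bool(letter)
        and len(letter) == 1
        and letter in string.ascii_lowercase
        and letter not in guessed
    )
    if not usable:
        return rl_predict(state, guessed)  # fallback path
    return letter
```

With stubs, `predict_with_fallback("_pp__", {"p"}, lambda s, g: None, lambda s, g: "e")` returns `"e"`, because the language model produced nothing.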

The HybridHangmanModel combines both models, switching between the RL model (when many letters are missing) and the GPT model (when fewer letters are missing).
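A minimal sketch of that switching rule, assuming it is driven by the fraction of still-hidden letters. The 0.5 threshold and the function names are hypothetical; the source only says RL handles words with many missing letters and GPT handles words with fewer.

```python
from typing import Callable, Set

Predictor = Callable[[str, Set[str]], str]  # (word_state, guessed) -> next letter


def missing_fraction(state: str) -> float:
    """Share of positions that are still underscores."""
    return state.count("_") / len(state)


def hybrid_predict(state: str, guessed: Set[str], rl_predict: Predictor,
                   llm_predict: Predictor, threshold: float = 0.5) -> str:
    if missing_fraction(state) > threshold:
        return rl_predict(state, guessed)  # mostly blank: little context for GPT
    return llm_predict(state, guessed)     # mostly filled: GPT has context to use
```

The intuition behind this split is that a language model gains most from seeing real letters around a gap, while early in the game there is almost nothing for it to condition on.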

The models are loaded from saved checkpoints when available; otherwise they are initialized with random weights.

The model's performance is tested on a set of predefined words and on 100,000 words randomly selected from a dictionary; the win rate is the number of games won out of those 100,000.
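Here is a sketch of how such a win rate can be computed: play one game per sampled word and divide wins by games played. The frequency-based policy is a hypothetical baseline standing in for the hybrid model, and sampling with replacement, the six-life limit, and counting repeated guesses as misses are my assumptions.

```python
import random
from collections import Counter
from typing import Callable, List, Set

Policy = Callable[[str, Set[str]], str]  # (word_state, guessed) -> next letter


def play_game(word: str, policy: Policy, lives: int = 6) -> bool:
    """Play one game; return True if the word is revealed before lives run out."""
    guessed: Set[str] = set()
    while lives > 0:
        state = "".join(c if c in guessed else "_" for c in word)
        if "_" not in state:
            return True
        letter = policy(state, set(guessed))
        if letter in guessed or letter not in word:
            lives -= 1  # repeats count as misses so a bad policy cannot loop forever
        guessed.add(letter)
    return False


def frequency_policy(words: List[str]) -> Policy:
    """Hypothetical baseline: guess letters in order of corpus frequency."""
    order = [c for c, _ in Counter("".join(words)).most_common()]

    def policy(state: str, guessed: Set[str]) -> str:
        for c in order + list("abcdefghijklmnopqrstuvwxyz"):
            if c not in guessed:
                return c
        raise ValueError("no letters left to guess")

    return policy


def win_rate(words: List[str], policy: Policy, n_games: int, seed: int = 0) -> float:
    """Sample n_games words (with replacement) and return games won / games played."""
    rng = random.Random(seed)
    wins = sum(play_game(rng.choice(words), policy) for _ in range(n_games))
    return wins / n_games
```

Fixing the seed makes the sampled words reproducible, so two models can be compared on exactly the same games.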

I bought Codenames Duet, but the tile cards were missing, so I created a Flask app that generates tiles for me to...